mathematical convex optimization

Most optimization problems can be expressed as the minimization of an objective function subject to a set of constraints, both of which are defined over a set of optimization variables:

- optimization variables

- objective function

- constraints

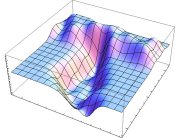
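For reference, a problem of this kind is commonly written in the form below. The symbols $x$, $f_0$, $f_i$, $b_i$, $n$ and $m$ are notation assumed here for illustration; they are not taken from the original figure.

$$
\begin{aligned}
\text{minimize} \quad & f_0(x) \\
\text{subject to} \quad & f_i(x) \le b_i, \quad i = 1, \dots, m
\end{aligned}
$$

Here $x = (x_1, \dots, x_n)$ is the vector of optimization variables, $f_0$ is the objective function, and each $f_i$ is a constraint function with bound $b_i$.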

The optimization variables are typically actions (in portfolio optimization, the amounts invested in various assets) or parameters (in data fitting, the parameters of the model being estimated). There exists a family of optimization problems, the convex problems, for which a global solution can be found both efficiently and reliably. For a problem to be convex, the objective function and the constraint functions must all be convex, that is, have non-negative curvature everywhere (zero curvature, as in a linear function, is allowed).
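The curvature condition can be probed numerically. The following is a minimal sketch, not a proof technique: it samples pairs of points and tests the midpoint inequality that every convex function satisfies. The helper name `looks_convex` and the three test functions are made up for illustration.

```python
import random

def looks_convex(f, lo, hi, trials=10_000, tol=1e-9, seed=0):
    """Return False if a sampled pair violates midpoint convexity.

    A convex f satisfies f((x + y) / 2) <= (f(x) + f(y)) / 2 for all x, y.
    Passing this check is only evidence of convexity, not a proof.
    """
    rng = random.Random(seed)
    for _ in range(trials):
        x, y = rng.uniform(lo, hi), rng.uniform(lo, hi)
        if f((x + y) / 2) > (f(x) + f(y)) / 2 + tol:
            return False
    return True

square = lambda x: x * x        # positive curvature: convex
linear = lambda x: 3 * x - 1    # zero curvature: still convex
cubic = lambda x: x ** 3        # negative curvature for x < 0: not convex

print(looks_convex(square, -5, 5))  # True
print(looks_convex(linear, -5, 5))  # True
print(looks_convex(cubic, -5, 5))   # False
```

The linear case passes because zero curvature satisfies the inequality with equality, while the cubic fails as soon as both sampled points fall in its concave region.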

Examples include least squares (no constraints, has an analytical solution), linear programming (objective and constraints with zero curvature, no analytical solution), and quadratic optimization (convex quadratic objective function with either linear or convex non-linear constraints).
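To illustrate what an analytical solution means for least squares, here is a small sketch that fits a straight line using the closed-form formulas, with no iterative solver involved. The function name `fit_line` and the data points are invented for the example.

```python
def fit_line(xs, ys):
    """Least-squares fit of y ~ slope * x + intercept using the closed form."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of squared deviations of x, and the x-y cross deviations.
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # made-up data lying exactly on y = 2x + 1
slope, intercept = fit_line(xs, ys)
print(slope, intercept)  # 2.0 1.0
```

A linear program, by contrast, has no such formula in general and is solved with an iterative algorithm.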

