q principal component analysis

PCA is a pattern recognition algorithm that highlights similarities and differences in a dataset. It can also be used to optimally (least information loss) reduce the dimension (given by the number of variates) of a dataset by projecting the data (in the least squares sense) onto a few of its principal (high variability) components (dimensions in transformed space). A reduction to 2 components (best approximating plane) can be used to capture and visualize the majority of the variance in the dataset.

The components correspond to the new transformed basis and don’t necessarily have a one-to-one correspondence with the original variates; there are two ways to think about why we would consider dropping components in the transformed space:

- if certain variates are redundant (express almost the same information i.e. are highly correlated) then it makes sense to include only a single component to record the information contained in the variates

- by rotating and stretching the data into a new basis we are left with components along which there is very little variance; intuitively these components correspond to noise since they fail to record any essential information

Since we are comparing the spreads of variates it makes sense to standardize the data to have zero mean and unit standard deviation.

Given a dataset where the

columns are sample vectors and the

rows are feature vectors, we are interested in finding directions in

(the row space) that capture maximal variance. We calculate the center of mass

which gives us the mean of each row. The center of mass will serve as the origin of the new basis.

Next we calculate the scatter matrix as the product of the demeaned dataset (subtract the mean of each row from the row so that all the rows have mean zero) with a transposed version of itself: or in matrix form

where

is the centering matrix. The entries of the scatter matrix measure the variance of the data in different directions relative to the center of mass; specifically the i-j th entry is the covariance between the ith and jth feature.

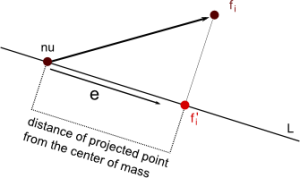

Since we are rotating our data into a new basis, what we will need is a measure of the variance of the data set projected onto each axis (infact any arbitrary axis since we need the one with highest variance). To illustrate how we can build such a measure (using only the scatter matrix and any arbitrary unit vector direction) let us project the data onto a line representing an axis in the direction

such that

The distance of the projected point from the center of mass

is then

So the total variance or the squared sum of the distances of all the projected points from the center of mass is given by:

which after some matrix algebra works out to .

So now we can calculate the variance of the data projected onto some arbitrary direction ; the principal component is precisely the direction that maximizes this variance:

subject to

. The lagrangian of this optimization is given by

where the

is a slack variable or lagrange multiplier. We apply the lagrangian saddle point equivalence theorem and differentiate with respect to the primal variables

or

which is in fact part of the eigen-decomposition of the scatter matrix. So the direction along which the projected data has maximized variance is an eigen-vector; but which one? The variance of an eigen-vector is given by

and since we are maximizing the variance

the eigen-vector with a corresponding maximal eigen-value

is the principal component.

Note if the eigen-values are nearly the same then there is no preferable “principal” component. Also pca is optimal only in the least-squares sense.

Connection to SVD

The svd of the scatter matrix is and since the scatter matrix is symmetric

, we have

which is satisfied only when

so we have

which is exactly the eigen-decomposition so that for

the eigen-values are the singular values. Look here for the general relationship between the eigendecomposition and the svd when the matrix is not square.

Outline of PCA algorithm

- Calculate the scatter matrix S

- Calculate the SVD of

- Rearrange the singular values and singular vectors:

and

- Select a new basis as a subset of the singular vectors

- Project the data onto the new basis

.qml.mpca:{[x;r] \x is samples (rows) by variates (cols)

t:-[;avg x] each x;

t: t $ flip t;

s:.qml.msvd[t];

u:s[0][;1+til r];

:flip[u] $ x;

};

m:10;

n:800;

x:(m;n)#(m*n)?1f;

.qml.mpca[x;2]

Kernel PCA

Is an extension of PCA for non-linear distributions. We would like to apply PCA in a higher dimensional space ; the application of this map to the data set is unnecessary because of the kernel trick. We simply form the kernel matrix and solve this dual problem by finding its singular value decomposition;

. A feature

in the higher dimensional feature space is given by

where

is a feature in the original feature space. For a derivation of this result look here or here

Variance Connection, Karhunen Connection

A typical data matrix contains signals each of which has some underlying noise; a reduced rank SVD approximation of the data matrix captures a highest variance mixture of the original signals in a lower dimensional space. The noise is usually associated with the smallest singular values (outliers generally have the least to say about the true distribution of the data because well it is noise and doesn’t belong in the dataset; they have the smallest semi-axis in the transformed unit sphere i.e. they are what the fuck can i explain this better?) which are dropped to form the approximation.

the karhunen–loève representation of a stochastic process

Consider a continuous time, continuous state space stochastic process that has a continuous and compact index set

and where the random variables

are measurable functions from a probability space to a (continuous, measurable) state space:

Assume the stochastic process is centered (each random variable is centered) so that then the autocovariance (the variance of the process or signal against a time shifted version of itself) is given by

which given our assumption can be viewed as a kernel

Let us assume that this kernel is positive, symmetric and square integrable then the resulting integral operator

is positive, self-adjoint and compact. It therefore follows from the Spectral Decomposition Theorem that must have a countable set of non-negative eigenvalues

; furthermore, the corresponding eigenfunctions

must form an orthonormal basis for

:

The integral transformation essentially changes the representation of the input function

to an output function

defined in a space that has a basis given by the set of eigenfunctions.

Let us make a stronger assumption (than being square integrable) that the covariance (kernel) function is continuous then by Mercer’s theorem (page 34) we have that:

where convergence is absolute and uniform in both variables. We define a new set of random variables based on our original stochastic process:

that are orthogonal and centered . We can then represent our original stochastic process as an infinite linear combination of orthogonal functions:

where convergence is uniform over the index set . It is interesting to note that the orthogonal basis functions used in this expansion are determined by the autocovariance function.

References:

1. Karhunen-Loeve Expansion of Vector Random Processes

2. Large Scale Learning: Dimensionality Reduction

singular value decomposition

The singular value decomposition of a matrix

is given by

where the rows or right-singular vectors of (and the columns or left-singular vectors of

) form a set of orthonormal basis vector directions:

and

The singular value matrix is diagonal with entries equal to the square roots of the eigenvalues of the matrix

. If the diagonal entries

are sorted in decreasing order then

is uniquely determined. The same is not true of the left and right singular vectors.

As a geometric transform

Any real matrix maybe viewed as a geometric transformation

that when applied to a set of data points rotates them in the direction of the right singular vectors

first and then stretches them in the direction of each of the left singular vectors

by a factor equal to its corresponding singular values

. The order of the rotate and stretch is important as it determines the final output space. Note that from

and

it is clear that the transformation changes the underlying basis; the SVD stretches and rotates the dataset into a space whose basis is given by the set of right singular vectors. If for example we were to apply

to the unit sphere, it is transformed (rotated and stretched) into an ellipsoid whose semi-axes are given by the singular values of

.

As a linear combination

Another way to see this factorization is as a weighted ordered sum of separable matrices each of which has

:

As an unconstrained optimization

We can also formulate the solution of the svd in terms of an optimization: find the best (minimizes the frobenius norm ) approximation

of

that has lower rank

. Dropping an appropriate number of singular values (starting with the smallest) and singular vectors and then reforming a matrix yields the exact global solution. The proof of this lies in the fact that

This result is the Eckhart-Young theorem.

Condition Number

The condition number of a matrix can be given in terms of its singular values:

A matrix is said to be ill conditioned if the condition number is too large. How large the condition number can be, before the matrix is ill conditioned, is determined by the machine precision.

10 comments